飞腾派是由萤火工场研发的一款面向行业工程师、学生和爱好者的开源硬件。飞腾派正在iCEasy商城和天猫旗舰店火热售卖中!小伙伴们可以直接登录iCEasy商城网站www.iceasy.com搜索“飞腾派”下单,或者在iCEasy商城小程序内下单!

引言

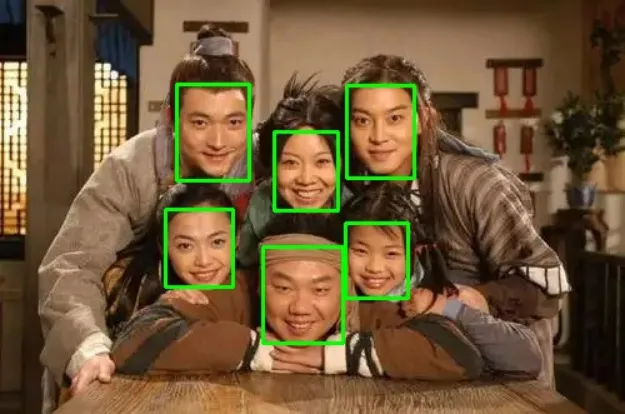

飞腾派作为采用国产自主研发的嵌入式处理器的全国产“派”,欲在工业生产落地应用,关于机器视觉的应用是绕不开的,因此本文章将带来飞腾派的机器视觉能力人脸识别测试。

国产自研开发板 飞腾派

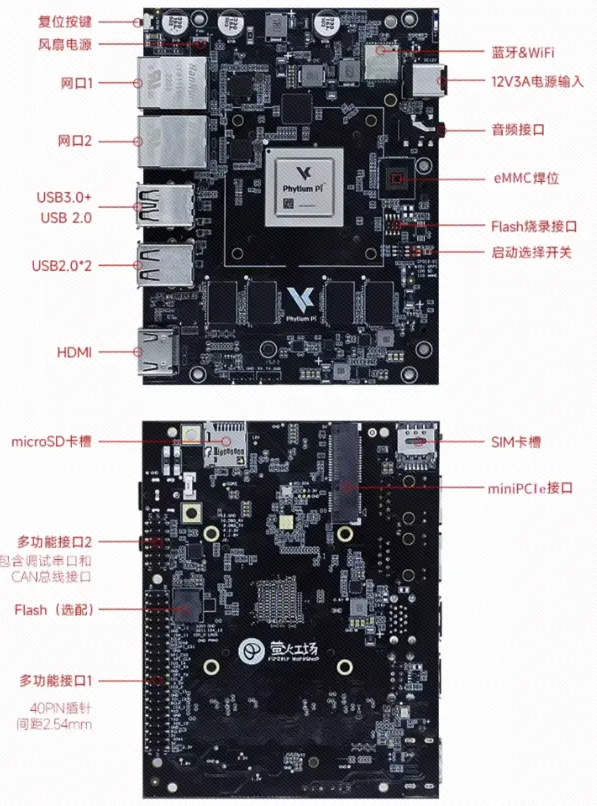

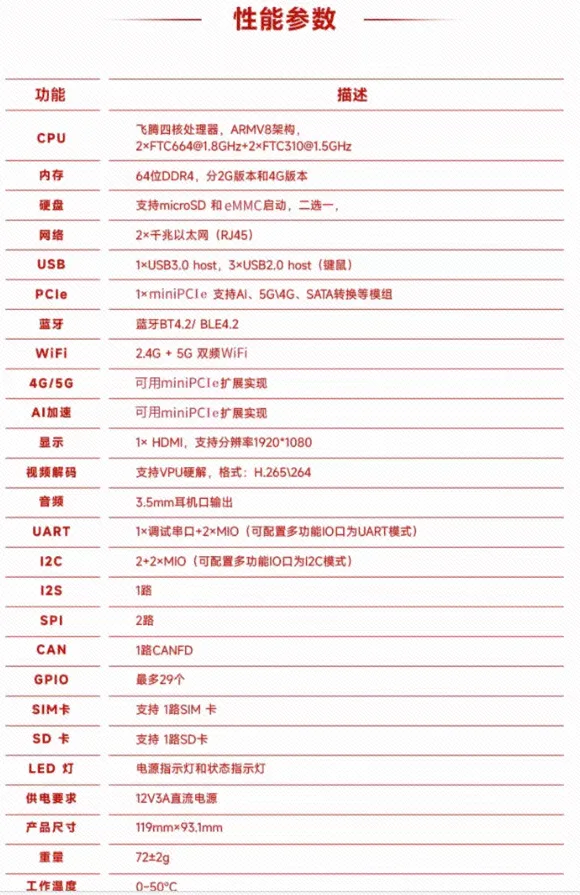

飞腾派是由萤火工场研发的一款面向行业工程师、学生和爱好者的国产自主可控开源硬件。主板处理器采用飞腾嵌入式四核处理器,该处理器兼容 ARM V8 指集,包含2个FTC664 核和2个FTC310 核,其中FTC664 核主频可达1.8GHzFTC310 核主频可达 1.5GHz。主板板载 64 位 DDR4 内存分2G 和 4G 两个版本,支持 SD 或者 eMMC 外部存储。主板板载 WiFi 蓝牙,陶瓷天线,可快速连接无线通信。另外还集成了大量外设接口,包括双路千兆以太网、USB、UART、CAN、HDMI、音频等接口,集成一路 miniPCIE 接口,可实现 A 加速卡与4G、5G 通信等多种功能模块的扩展。

主板操作系统支持支持国内 OpenKylin、OpenHarmony、SylixOS.RT-Thread 、Deepin等国产操作系统, 也支持Ubuntu、Debian 等国外主流开源操作系统。

准备阶段

飞腾派在使用之前都需要准备一块足够容量的内存卡,并将系统镜像烧入到卡中,以启动“派”,飞腾派支持多种操作系统例如操作Ubuntu、Debian、Yocto、OpenKylin、OpenHarmony、SylixOS.RT-Thread 、Deepin等开源操作系统,本次评测选用基于Debian11的飞腾派OS作为主要开发系统。

设置编译器

$ export PATH=$PATH:${YOUR_PATH}/riscv/bin$ export CC=riscv64-unknown-linux-gnu-gcc$ export CXX=riscv64-unknown-linux-gnu-g++ 模型部分

MNN的使用较为简单,使用方法类似TensorFlow 1.x,简要的流程为:

// 创建会话createSession();// 设置输入getSessionInput();// 运行会话runSession();// 获取输出getSessionOutput();// 创建会话createSession();// 设置输入getSessionInput();// 运行会话runSession();// 获取输出getSessionOutput();在该逻辑下,将模型的运行预处理,推理,后处理封装为一个模块:// ultraFace.hpp#ifndef UltraFace_hpp#define UltraFace_hpp#pragma once#include "MNN/Interpreter.hpp"#include "MNN/MNNDefine.h"#include "MNN/Tensor.hpp"#include "MNN/ImageProcess.hpp"#include <opencv2/opencv.hpp>#include <algorithm>#include <iostream>#include <string>#include <vector>#include <memory>#include <chrono>#define num_featuremap 4#define hard_nms 1#define blending_nms 2 /* mix nms was been proposaled in paper blaze face, aims to minimize the temporal jitter*/typedef struct FaceInfo {float x1;float y1;float x2;float y2;float score;} FaceInfo;class UltraFace {public:UltraFace(const std::string &mnn_path,int input_width, int input_length, int num_thread_ = 4, float score_threshold_ = 0.7, float iou_threshold_ = 0.3,int topk_ = -1);~UltraFace();int detect(cv::Mat &img, std::vector<FaceInfo> &face_list);private:void generateBBox(std::vector<FaceInfo> &bbox_collection, MNN::Tensor *scores, MNN::Tensor *boxes);void nms(std::vector<FaceInfo> &input, std::vector<FaceInfo> &output, int type = blending_nms);private:std::shared_ptr<MNN::Interpreter> ultraface_interpreter;MNN::Session *ultraface_session = nullptr;MNN::Tensor *input_tensor = nullptr;int num_thread;int image_w;int image_h;int in_w;int in_h;int num_anchors;float score_threshold;float iou_threshold;const float mean_vals[3] = {127, 127, 127};const float norm_vals[3] = {1.0 / 128, 1.0 / 128, 1.0 / 128};const float center_variance = 0.1;const float size_variance = 0.2;const std::vector<std::vector<float>> min_boxes = {{10.0f, 16.0f, 24.0f},{32.0f, 48.0f},{64.0f, 96.0f},{128.0f, 192.0f, 256.0f}};const std::vector<float> strides = {8.0, 16.0, 32.0, 64.0};std::vector<std::vector<float>> featuremap_size;std::vector<std::vector<float>> shrinkage_size;std::vector<int> w_h_list;std::vector<std::vector<float>> priors = {};};#endif /* UltraFace_hpp */// ultraFace.cpp#define clip(x, y) (x < 0 ? 0 : (x > y ? y : x))#include "UltraFace.hpp"using namespace std;UltraFace::UltraFace(const std::string &mnn_path,int input_width, int input_length, int num_thread_,float score_threshold_, float iou_threshold_, int topk_) {num_thread = num_thread_;score_threshold = score_threshold_;iou_threshold = iou_threshold_;in_w = input_width;in_h = input_length;w_h_list = {in_w, in_h};for (auto size : w_h_list) {std::vector<float> fm_item;for (float stride : strides) {fm_item.push_back(ceil(size / stride));}featuremap_size.push_back(fm_item);}for (auto size : w_h_list) {shrinkage_size.push_back(strides);}/* generate prior anchors */for (int index = 0; index < num_featuremap; index++) {float scale_w = in_w / shrinkage_size[0][index];float scale_h = in_h / shrinkage_size[1][index];for (int j = 0; j < featuremap_size[1][index]; j++) {for (int i = 0; i < featuremap_size[0][index]; i++) {float x_center = (i + 0.5) / scale_w;float y_center = (j + 0.5) / scale_h;for (float k : min_boxes[index]) {float w = k / in_w;float h = k / in_h;priors.push_back({clip(x_center, 1), clip(y_center, 1), clip(w, 1), clip(h, 1)});}}}}/* generate prior anchors finished */num_anchors = priors.size();ultraface_interpreter = std::shared_ptr<MNN::Interpreter>(MNN::Interpreter::createFromFile(mnn_path.c_str()));MNN::ScheduleConfig config;config.numThread = num_thread;MNN::BackendConfig backendConfig;backendConfig.precision = (MNN::BackendConfig::PrecisionMode) 2;config.backendConfig = &backendConfig;ultraface_session = ultraface_interpreter->createSession(config);input_tensor = ultraface_interpreter->getSessionInput(ultraface_session, nullptr);}UltraFace::~UltraFace() {ultraface_interpreter->releaseModel();ultraface_interpreter->releaseSession(ultraface_session);}int UltraFace::detect(cv::Mat &raw_image, std::vector<FaceInfo> &face_list) {if (raw_image.empty()) {std::cout << "image is empty ,please check!" << std::endl;return -1;}image_h = raw_image.rows;image_w = raw_image.cols;cv::Mat image;cv::resize(raw_image, image, cv::Size(in_w, in_h));ultraface_interpreter->resizeTensor(input_tensor, {1, 3, in_h, in_w});ultraface_interpreter->resizeSession(ultraface_session);std::shared_ptr<MNN::CV::ImageProcess> pretreat(MNN::CV::ImageProcess::create(MNN::CV::BGR, MNN::CV::RGB, mean_vals, 3,norm_vals, 3));pretreat->convert(image.data, in_w, in_h, image.step[0], input_tensor);auto start = chrono::steady_clock::now();// run networkultraface_interpreter->runSession(ultraface_session);// get output datastring scores = "scores";string boxes = "boxes";MNN::Tensor *tensor_scores = ultraface_interpreter->getSessionOutput(ultraface_session, scores.c_str());MNN::Tensor *tensor_boxes = ultraface_interpreter->getSessionOutput(ultraface_session, boxes.c_str());MNN::Tensor tensor_scores_host(tensor_scores, tensor_scores->getDimensionType());tensor_scores->copyToHostTensor(&tensor_scores_host);MNN::Tensor tensor_boxes_host(tensor_boxes, tensor_boxes->getDimensionType());tensor_boxes->copyToHostTensor(&tensor_boxes_host);std::vector<FaceInfo> bbox_collection;auto end = chrono::steady_clock::now();chrono::duration<double> elapsed = end - start;cout << "inference time:" << elapsed.count() << " s" << endl;generateBBox(bbox_collection, tensor_scores, tensor_boxes);nms(bbox_collection, face_list);return 0;}void UltraFace::generateBBox(std::vector<FaceInfo> &bbox_collection, MNN::Tensor *scores, MNN::Tensor *boxes) {for (int i = 0; i < num_anchors; i++) {if (scores->host<float>()[i * 2 + 1] > score_threshold) {FaceInfo rects;float x_center = boxes->host<float>()[i * 4] * center_variance * priors[i][2] + priors[i][0];float y_center = boxes->host<float>()[i * 4 + 1] * center_variance * priors[i][3] + priors[i][1];float w = exp(boxes->host<float>()[i * 4 + 2] * size_variance) * priors[i][2];float h = exp(boxes->host<float>()[i * 4 + 3] * size_variance) * priors[i][3];rects.x1 = clip(x_center - w / 2.0, 1) * image_w;rects.y1 = clip(y_center - h / 2.0, 1) * image_h;rects.x2 = clip(x_center + w / 2.0, 1) * image_w;rects.y2 = clip(y_center + h / 2.0, 1) * image_h;rects.score = clip(scores->host<float>()[i * 2 + 1], 1);bbox_collection.push_back(rects);}}}void UltraFace::nms(std::vector<FaceInfo> &input, std::vector<FaceInfo> &output, int type) {std::sort(input.begin(), input.end(), [](const FaceInfo &a, const FaceInfo &b) { return a.score > b.score; });int box_num = input.size();std::vector<int> merged(box_num, 0);for (int i = 0; i < box_num; i++) {if (merged[i])continue;std::vector<FaceInfo> buf;buf.push_back(input[i]);merged[i] = 1;float h0 = input[i].y2 - input[i].y1 + 1;float w0 = input[i].x2 - input[i].x1 + 1;float area0 = h0 * w0;for (int j = i + 1; j < box_num; j++) {if (merged[j])continue;float inner_x0 = input[i].x1 > input[j].x1 ? input[i].x1 : input[j].x1;float inner_y0 = input[i].y1 > input[j].y1 ? input[i].y1 : input[j].y1;float inner_x1 = input[i].x2 < input[j].x2 ? input[i].x2 : input[j].x2;float inner_y1 = input[i].y2 < input[j].y2 ? input[i].y2 : input[j].y2;float inner_h = inner_y1 - inner_y0 + 1;float inner_w = inner_x1 - inner_x0 + 1;if (inner_h <= 0 || inner_w <= 0)continue;float inner_area = inner_h * inner_w;float h1 = input[j].y2 - input[j].y1 + 1;float w1 = input[j].x2 - input[j].x1 + 1;float area1 = h1 * w1;float score;score = inner_area / (area0 + area1 - inner_area);if (score > iou_threshold) {merged[j] = 1;buf.push_back(input[j]);}}switch (type) {case hard_nms: {output.push_back(buf[0]);break;}case blending_nms: {float total = 0;for (int i = 0; i < buf.size(); i++) {total += exp(buf[i].score);}FaceInfo rects;memset(&rects, 0, sizeof(rects));for (int i = 0; i < buf.size(); i++) {float rate = exp(buf[i].score) / total;rects.x1 += buf[i].x1 * rate;rects.y1 += buf[i].y1 * rate;rects.x2 += buf[i].x2 * rate;rects.y2 += buf[i].y2 * rate;rects.score += buf[i].score * rate;}output.push_back(rects);break;}default: {printf("wrong type of nms.");exit(-1);}}}}

看起来运行效果相当不错,仅需55ms左右即可完成推理!

飞腾派V3 现货支持

飞腾派V3 现货发售,支持全四口USB 3.0接口,PWM智能调速散热风扇,国标/美标耳机自动识别等功能,售价649元包邮。

https://www.iceasy.com/product/1875487334855221250

飞腾派资料下载专区

提供飞腾派相关资料下载,包括开发板手册、开源项目文档等。

https://www.iceasy.com/cloud/Phytium?pid=1877646941793374213

开源口碑案例分享

可参考飞腾派开发板的用户评价和社区反馈。

https://www.iceasy.com/review/list